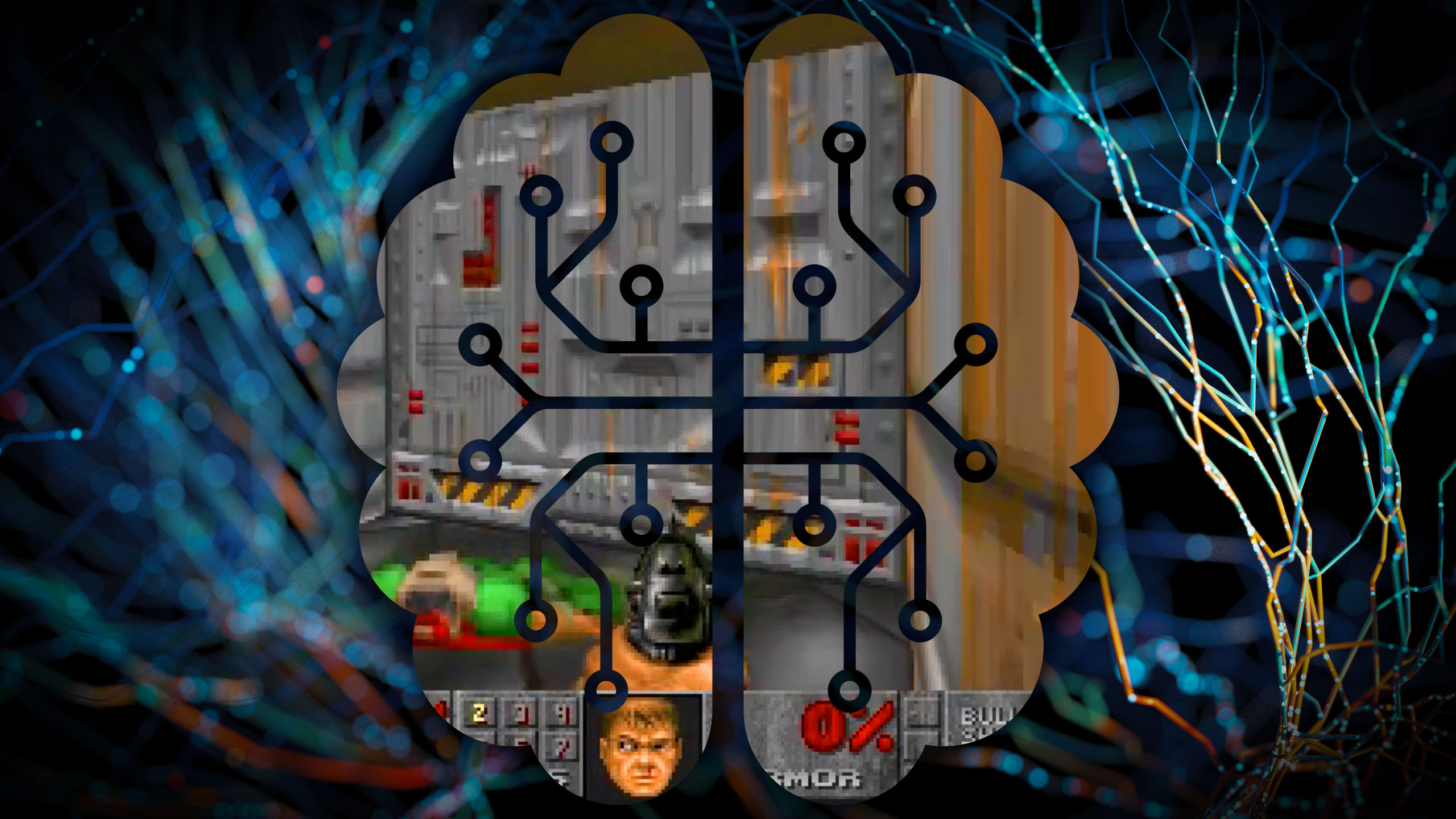

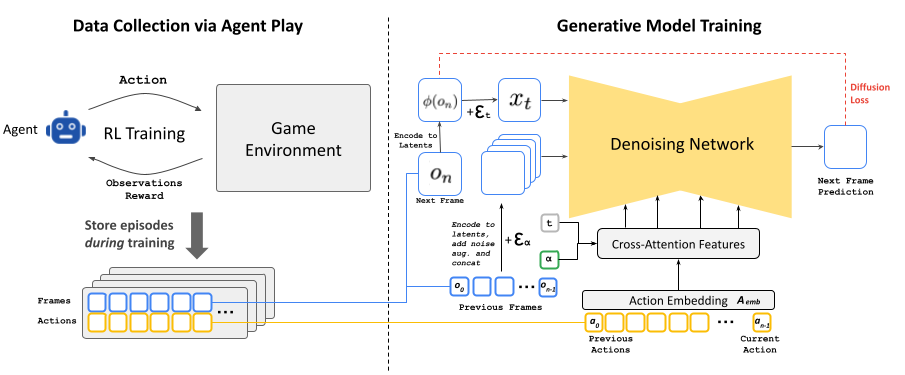

On Tuesday, researchers from Google and Tel Aviv University unveiled GameNGen, a new AI model that can interactively simulate the classic 1993 first-person shooter game Doom in real time using AI image generation techniques borrowed from Stable Diffusion. It's a neural network system that can function as a limited game engine, potentially opening new possibilities for real-time video game synthesis in the future.

For example, instead of drawing graphical video frames using traditional techniques, future games could potentially use an AI engine to "imagine" or hallucinate graphics in real time as a prediction task.
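To make "graphics as a prediction task" concrete, here is a minimal sketch of what such a game loop might look like: each new frame is predicted from recent frames and player inputs rather than drawn by a renderer. Everything in it is an assumption for illustration. `toy_predict_next_frame` is a hand-written placeholder standing in for a trained neural network, and `run_neural_game_loop` and `context_len` are hypothetical names, not anything taken from GameNGen.

```python
from collections import deque
from typing import Callable, List, Sequence

Frame = List[List[int]]  # a tiny grayscale image: rows of pixel values


def toy_predict_next_frame(past_frames: Sequence[Frame],
                           past_actions: Sequence[str]) -> Frame:
    """Hypothetical stand-in for a learned model (not a real network).

    It scrolls the most recent frame sideways based on the latest action.
    A real system would run a trained neural network here instead.
    """
    last = past_frames[-1]
    action = past_actions[-1]
    if action == "left":
        return [row[1:] + row[:1] for row in last]
    if action == "right":
        return [row[-1:] + row[:-1] for row in last]
    return [row[:] for row in last]  # unknown action: repeat the frame


def run_neural_game_loop(predict: Callable[[Sequence[Frame], Sequence[str]], Frame],
                         first_frame: Frame,
                         actions: Sequence[str],
                         context_len: int = 4) -> List[Frame]:
    """Generate one frame per player action, feeding outputs back as context."""
    frames = deque([first_frame], maxlen=context_len)  # recent frames only
    history = deque(maxlen=context_len)                # recent actions only
    shown: List[Frame] = []
    for action in actions:
        history.append(action)
        next_frame = predict(list(frames), list(history))
        frames.append(next_frame)  # the prediction becomes future context
        shown.append(next_frame)
    return shown


start = [[1, 0, 0]]
for frame in run_neural_game_loop(toy_predict_next_frame, start,
                                  ["right", "right", "left"]):
    print(frame)
```

The point of the sketch is the feedback loop: no game rules are written anywhere outside the predictor, so any game logic has to live inside whatever model fills that role.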

"The potential here is absurd," wrote app developer Nick Dobos in reaction to the news. "Why write complex rules for software by hand when the AI can just think every pixel for you?"

GameNGen can reportedly generate new frames of Doom gameplay at over 20 frames per second using a single tensor processing unit (TPU), a type of specialized processor similar to a GPU that is optimized for machine learning tasks.

In tests, the researchers say that ten human raters sometimes failed to distinguish between short clips (1.6 seconds and 3.2 seconds) of actual Doom game footage and outputs generated by GameNGen, identifying the true gameplay footage 58 percent and 60 percent of the time, respectively, only modestly better than the 50 percent expected from random guessing.

Real-time video game synthesis using what might be called "neural rendering" is not a completely novel idea. Nvidia CEO Jensen Huang predicted during an interview in March, perhaps somewhat boldly, that most video game graphics could be generated by AI in real time within five to 10 years.

GameNGen also builds on previous work in the field, cited in the GameNGen paper, that includes World Models in 2018, GameGAN in 2020, and Google's own Genie in March. And a group of university researchers trained an AI model (called "DIAMOND") to simulate vintage Atari video games using a diffusion model earlier this year.
