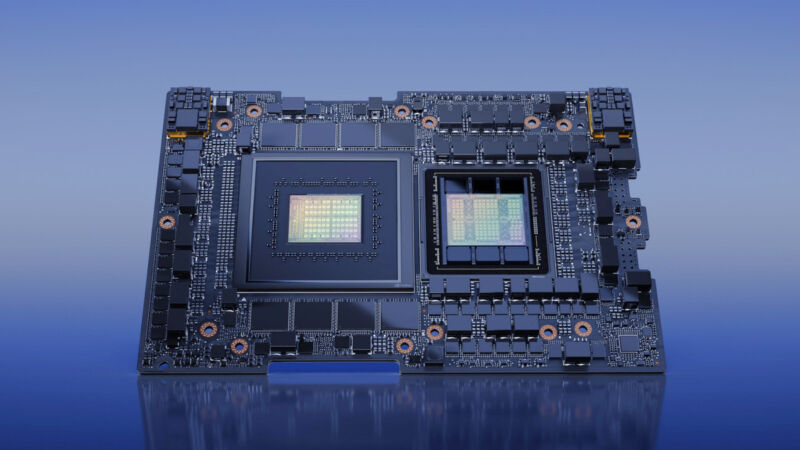

Early last week at COMPUTEX, Nvidia announced that its new GH200 Grace Hopper "Superchip"—a combination CPU and GPU specifically created for large-scale AI applications—has entered full production. It's a beast. It has 528 GPU tensor cores, supports up to 480GB of CPU RAM and 96GB of GPU RAM, and boasts a GPU memory bandwidth of up to 4TB per second.

We've previously covered the Nvidia H100 Hopper chip, which is currently Nvidia's most powerful data center GPU. It powers AI models like OpenAI's ChatGPT, and it marked a significant upgrade over 2020's A100 chip, which powered the first round of training runs for many of the news-making generative AI chatbots and image generators we're talking about today.

Faster GPUs roughly translate into more powerful generative AI models because they can run more matrix multiplications in parallel (and do it faster), which is necessary for today's artificial neural networks to function.

The GH200 takes that "Hopper" foundation and combines it with Nvidia's "Grace" CPU platform (both named after computer pioneer Grace Hopper), rolling it into one chip through Nvidia's NVLink chip-to-chip (C2C) interconnect technology. Nvidia expects the combination to dramatically accelerate AI and machine-learning applications in both training (creating a model) and inference (running it).

“Generative AI is rapidly transforming businesses, unlocking new opportunities and accelerating discovery in healthcare, finance, business services and many more industries,” said Ian Buck, vice president of accelerated computing at Nvidia, in a press release. “With Grace Hopper Superchips in full production, manufacturers worldwide will soon provide the accelerated infrastructure enterprises needed to build and deploy generative AI applications that leverage their unique proprietary data.”

According to the company, key features of the GH200 include a new 900GB/s coherent (shared) memory interface, which is seven times faster than PCIe Gen5. The GH200 also offers 30 times higher aggregate system memory bandwidth to the GPU compared to the aforementioned Nvidia DGX A100. Additionally, the GH200 can run all Nvidia software platforms, including the Nvidia HPC SDK, Nvidia AI, and Nvidia Omniverse.

Notably, Nvidia also announced that it will be building this combo CPU/GPU chip into a new supercomputer called the DGX GH200, which can utilize the combined power of 256 GH200 chips to perform as a single GPU, providing 1 exaflop of performance and 144 terabytes of shared memory, nearly 500 times more memory than the previous-generation Nvidia DGX A100.

The DGX GH200 will be capable of training giant next-generation AI models (GPT-6, anyone?) for generative language applications, recommender systems, and data analytics. Nvidia did not announce pricing for the GH200, but according to Anandtech, a single DGX GH200 computer is "easily going to cost somewhere in the low 8 digits."

Overall, it's reasonable to say that thanks to continued hardware advancements from vendors like Nvidia and Cerebras, high-end cloud AI models will likely continue to become more capable over time, processing more data and doing it much faster than before. Let's just hope they don't argue with tech journalists.

reader comments

124