"Douchey uses of AI"


The book for this year's Penn Reading Project is Cathy O'Neil's Weapons of Math Destruction. From the PRP's description:

We live in the age of the algorithm. Increasingly, the decisions that affect our lives—where we go to school, whether we get a car loan, how much we pay for health insurance—are being made not by humans but by mathematical models. In theory, this should lead to greater fairness: everyone is judged according to the same rules, and bias is eliminated.

But as Cathy O’Neil reveals in this urgent and necessary book, the opposite is true.

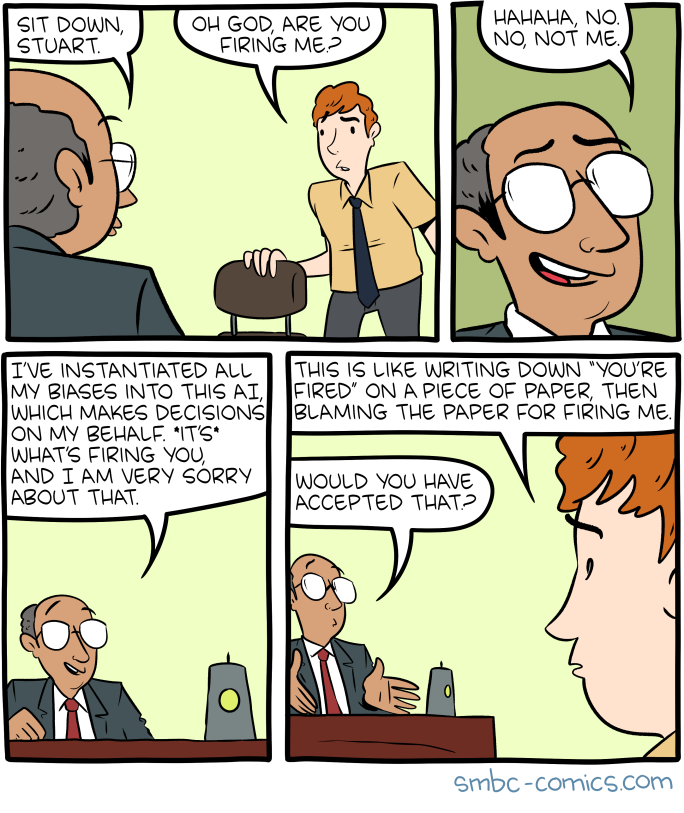

I've been seeing lots of resonances of this concern elsewhere in popular culture, for example this recent SMBC, which focuses on the deflection of responsibility:

The mouseover title: "Ever since I got an early copy of Janelle Shane's new book, 80% of my jokes have been about douchey uses of AI."

The cited book is You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It's Making the World a Weirder Place, which is more about why AI is not really very intelligent (along the lines of our elephant semifics category) than about why AI is a threat to democracy and justice.

The aftercomic:

Another relevant SMBC strip here.

A relevant recent opinion piece — Olaf Groth, Mark Nitzberg, and Stuart Russell, "AI Algorithms need FDA-style drug trials", Wired 8/15/2019. (Though it's not clear to me why this applies specifically to AI, how you could do realistic trials given the importance of network effects, or how you could prevent it from becoming an innovation-strangling bureaucracy.)

Gregory Kusnick said,

August 16, 2019 @ 12:46 pm

Are Groth, Nitzberg, and Russell engaging in the same sort of blame-shifting as the boss in the comic?

"…in California, a regulation that requires bots to self-identify as such and makes it illegal to deploy bots that knowingly deceive others in an attempt to encourage a purchase or influence a vote."

Philip Taylor said,

August 17, 2019 @ 1:50 am

I have not previously encountered the adjective "douchey", and keep misreading it as "duchy" (living in the Duchy of Cornwall, this is perhaps not surprising), but (a) what does it mean (I assume it to be pejorative), and (b) how is it to be pronounced? Is it /duːʃ i/, /daʊtʃ i/, or something else?

Chas Belov said,

August 17, 2019 @ 2:12 am

Wiktionary spells it douchy: the adjectival form of the noun douche, itself a pejorative short form of the pejorative "douche bag", which apparently means an obnoxious person.

Bob Ladd said,

August 17, 2019 @ 3:00 am

The expression douche bag meaning an obnoxious person has been around at least since the 60s. I used it regularly as an undergraduate in the mid-60s, and I was surprised when it showed up fairly regularly in my son's blog in the early 2000s. I was one of three authors of a large questionnaire-based study of undergraduate slang in 1968 (my last year as an undergraduate) and we got a small number of douche (bag) responses to two questionnaire items, 'an obnoxious person' and 'a person who always does the wrong thing'. However, these responses came mostly from men, only from 5 of the 13 colleges and universities we surveyed, and the word doesn't show up in the 1975 edition of Wentworth and Flexner's Dictionary of American Slang. (It does show up in Pam Munro's 1983 survey of UCLA slang.) I have the impression – possibly unjustified – that it's still pretty restricted. But it's remarkable, given the supposed ephemerality of slang, that the word has continued to be used in more or less the same meaning by more or less the same kind of people for more than half a century.

Tom Saylor said,

August 17, 2019 @ 6:28 am

I was struck by the use of ‘instantiate’ with ‘into’ to mean something like ‘incorporate’ or ‘instill’. In my experience (admittedly limited to discussions of Platonic metaphysics long ago), ‘instantiate’ has always meant something like ‘exemplify (an abstract quality)’. A statue instantiates beauty, but a sculptor doesn’t instantiate beauty into a statue. Is ‘instantiate into’ a recent development?

Trogluddite said,

August 17, 2019 @ 8:15 am

> "In theory, this should lead to greater fairness: everyone is judged according to the same rules, and bias is eliminated."

If you take as a starting point the fallacy that merely *applying* a set of rules impartially is sufficient to ensure impartial outcomes, without considering biases in the rules themselves, then biased outcomes are hardly surprising, no matter who or what is making the decisions.

Given how often I've seen "jobsworth" bureaucrats, politicians, business leaders, the judiciary, etc. criticised for applying the letter of the law rather than its perceived intent, and the prevalence of the "computer says no" meme, I've long been astonished by how easily many people seem to fall prey to the above line of reasoning as soon as a technological decision maker is introduced.

I'm always reminded of the (apocryphal?) tale of Charles Babbage being asked in all seriousness by members of the UK Parliament whether his mechanical calculating engines would be able to spit out the right answer to a mathematical problem when fed incorrect input data.

Twill said,

August 17, 2019 @ 9:22 am

@Trogluddite

It all comes down to the idiotic but frighteningly pervasive notion that computers are hyperrational beings, not exceptionally intricate and versatile machinery (I blame science fiction writers/enthusiasts and the ubiquitous philosophical idea that we humans are ourselves fleshy machines). The recognition that at every level everything a computer does is entirely the work of human hands is massively undermined by the constant gawking at how "smart" and "intelligent" computers are, which often feeds the fantasy of a future ruled by sentient computers possessing transcendental intellect. The reality that, say, a glorified merit calculator is probably just adding inane data points according to a meaningless algorithm made by a hapless programmer is fairly obvious if you possess some level of technological nous, but to someone for whom computers may as well run on fairy dust, the program is an impersonal, emotionless (and thus perfectly "scientific") mind making cold, rational inferences.

ktschwarz said,

August 17, 2019 @ 12:00 pm

instantiate into: This seems to be mostly a computer science usage, e.g. "this algorithm is instantiated into a standard list of machine operations". Google Books found examples going back to 1981. Philosophers and linguists prefer "instantiate in", e.g. "The universal form may have existed before being instantiated in a particular piece of matter (because it may have been instantiated in another)".

The association of "instantiate", bias, and AI has a precedent in this 2014 article by a cognitive science professor:

It goes on to describe what LL would call "elephant semifics" as the bias of speech-recognition software toward interpreting all sounds as English sentences.

Gregory Kusnick said,

August 17, 2019 @ 12:17 pm

"at every level everything a computer does is entirely the work of human hands"

It seems to me that something close to the opposite is true. At almost every level, from chip design to circuit board layout, to the translation and optimization of human-readable algorithms into executable machine code, to the way AlphaGo taught itself to play, most of the heavy lifting is done by automated algorithms in which human hands play little or no role, and human comprehension of the details is not a requirement.

And algorithms that try to make sense of noisy or garbled input are scarcely a fantasy; I'm interacting with one right now as I type this text into my phone.

Chas Belov said,

August 17, 2019 @ 2:35 pm

I hear "douche bag" fairly often in San Francisco in general usage among, I think, people in their 20s-40s.

Chas Belov said,

August 17, 2019 @ 2:45 pm

I also ran an ngram for "douchebag,douche bag". Of course an ngram can't tell us how the word is being used, so I searched Google Books, and it turned up in multiple book titles, including a series.

Bob Ladd said,

August 17, 2019 @ 3:37 pm

@Chas Belov: Thanks! From its beginnings in the 60s (or perhaps 50s), douchebag has clearly escaped from the campus and gone mainstream. But it still looks like it's largely restricted (as you suggest) to people who haven't yet reached middle age, and probably also (as the book titles suggest) to a middle-class milieu.

Windowless Monad said,

August 17, 2019 @ 5:44 pm

@Gregory Kusnick: the point is that every one of those 'automated algorithms' is the product of human effort, and as such expresses at some level the preconceptions, assumptions, and biases of its creators.

peterv said,

August 17, 2019 @ 6:00 pm

> "In theory, this should lead to greater fairness: everyone is judged according to the same rules, and bias is eliminated."

In addition to @Trogluddite’s argument, there is a very good argument that judging different people by the same rules is inherently unfair, since standard rules cannot take account of individual features or differences. When Cambridge University switched from oral to written examinations in mathematics in the 1770s, the opponents of written exams made this same argument: How can it possibly be fair to ask the same questions of everyone, since only questions tailored to each individual student could adequately assess their individual mathematical ability?

Andrew Usher said,

August 18, 2019 @ 10:05 am

The question should be not whether a particular method is 'fair' but whether it is more fair than the alternative. It would be lying to pretend that any process in life is perfectly fair, and many things deviate a lot more than what gets complained about.

But of course the rules themselves can be biased, indeed they must be, as decisions must be made to set them. When the rules are known to everyone, though, obvious biases are eliminated. The problem with this new type of algorithm is that the rules are treated as a trade secret – and _that_ is where they can lack any fairness advantage over more arbitrary procedures also regarded as secret. Possibly they could be worse as no one feels individual responsibility for them – the attitude shown in this cartoon – but I am convinced that that is a problem with all organisations, no matter what method they use.

So I'm not especially worried about this, but I do think some transparency should be demanded in matters that have a major impact on people's lives.


Rodger C said,

August 18, 2019 @ 11:48 am

One law for the Lion & Ox is Oppression.

Mary Kuhner said,

August 21, 2019 @ 5:52 pm

Many people in the chess community have pored over the games played between the previous human-designed chess AI Stockfish and the new self-taught AI AlphaZero. It does appear that Stockfish incorporates a lot of human understandings about how to play the game which AlphaZero markedly lacks. In particular, compared to AlphaZero both Stockfish and (most) human players show a strong attachment to the estimated value of their pieces, and are quite reluctant to give up pieces or exchange greater for lesser pieces without clear compensation. AlphaZero sometimes plays as though no one ever told it the values of the pieces (and indeed, no one ever did).

It's claimed that the current (human) World Champion is studying AlphaZero's games in the hopes of improving his own play, and he did have a string of very nice wins in a more "loose" style recently that might be the AI's influence.

It's true in general that AI incorporates the biases of its authors, but due to the way it arose, AlphaZero does seem to be a striking exception. In other respects, though, it does seem to share our experiences. Its authors tracked what opening variations it preferred throughout its training period. It frequently used the French Defense when it was around my playing strength, and then rejected it later. The same thing happened in human chess: the French Defense used to be a staple in World Championship matches but is now seldom seen at the top levels, though it continues to be popular among amateurs.